I have observed that resampling can break badly on loudly mastered tracks. With the loudness war now going into its third decade, this can be observed in almost any track.

The issue is inter-sample peaks, which apparently nobody in the industry takes seriously. (I don't care much about lossy compression, but that makes things even worse.)

To check how relevant the issue is, I went through my whole digital library of high-quality tracks (some high-res, some CD quality), and what can I say: almost every album has inter-sample peaks! Here is a screenshot of something current from foobar2000's ReplayGain measurement, which measures inter-sample peaks:

The peaks shown here are on a linear scale, so the value of 1.172368 equals +1.38 dBFS!
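For reference, the conversion from a linear peak value to dBFS is 20 times the base-10 logarithm of the value. A minimal Python sketch (the function name `linear_to_dbfs` is made up for this example and is not part of foobar2000):

```python
import math

def linear_to_dbfs(peak: float) -> float:
    """Convert a linear peak value (1.0 = full scale) to dBFS."""
    return 20.0 * math.log10(peak)

print(f"{linear_to_dbfs(1.172368):+.2f} dBFS")  # +1.38 dBFS
```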

All this compounds very badly when resampling is thrown in on top of an already bad situation, because resampling follows the analog waveform reconstructed between the original digital samples. If that waveform rises above 0 dBFS between samples that are themselves all at or below 0 dBFS, the resampler, which hits different points in time because the sampling rate is different, now has to produce samples above 0 dBFS, which the digital format cannot represent.
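The effect can be reproduced on paper with a plain sine. The sketch below is only an illustration under assumptions: the tone frequency, phase and the 44.1 kHz source rate are picked for the example, and instead of a real resampling filter it simply evaluates the same band-limited waveform at the new sample instants, which is what an ideal resampler would approximate.

```python
import math

FS_IN = 44_100       # source rate (assumed for illustration)
FS_OUT = 96_000      # target rate, as with a fixed 96k output
FREQ = FS_IN / 4     # hypothetical tone at a quarter of the source rate
AMP = math.sqrt(2)   # chosen so the 44.1k samples land on +/-1.0
PHASE = math.pi / 4  # 45 degrees: the samples straddle each crest

def tone(t: float) -> float:
    """The band-limited waveform that both sample sets are taken from."""
    return AMP * math.sin(2 * math.pi * FREQ * t + PHASE)

def sample(rate: int, count: int) -> list[float]:
    """Sample the waveform at the given rate."""
    return [tone(n / rate) for n in range(count)]

peak_in = max(abs(s) for s in sample(FS_IN, 441))
peak_out = max(abs(s) for s in sample(FS_OUT, 1280))

print(f"peak at {FS_IN} Hz: {peak_in:.4f}")   # 1.0000
print(f"peak at {FS_OUT} Hz: {peak_out:.4f}")  # 1.4142
```

The 44.1 kHz samples never exceed full scale, but the 96 kHz sample grid lands on the crests and needs values around 1.41.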

To make my case, I created a (perfectly valid!) digital test track so that I can show objective measurements of the magnitude of the issue.

This is the test track I used for these measurements; it has inter-sample peaks of +1.25 dBFS: here

And this is a version with the volume reduced by 1.25 dB, so that the inter-sample peaks no longer exceed 0 dBFS: here

Here is a test track with inter-sample peaks of +3 dB. I have not used it for generating these plots, but +3 dB is the maximum inter-sample peak possible with a single test tone. Here is the same track reduced by 3 dB.
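How much overshoot a steady tone can hide depends on where the samples fall on the waveform. The following sketch computes the gap between the highest sample and the true crest for a tone with a whole number of samples per cycle. The helper `hidden_overshoot_db` and the chosen cases are illustrative assumptions; in particular, whether the linked test tracks were built from these exact tones is not stated here.

```python
import math

def hidden_overshoot_db(samples_per_cycle: int, phase_deg: float) -> float:
    """How far the true peak of a sine sits above its highest sample, in dB."""
    phase = math.radians(phase_deg)
    step = 2 * math.pi / samples_per_cycle
    sample_peak = max(abs(math.sin(step * n + phase))
                      for n in range(samples_per_cycle))
    return 20 * math.log10(1.0 / sample_peak)

# fs/4 with the samples straddling each crest
print(f"{hidden_overshoot_db(4, 45):.2f} dB")  # 3.01 dB
# fs/4 with a sample exactly on each crest
print(f"{hidden_overshoot_db(4, 0):.2f} dB")   # 0.00 dB
# fs/6 with a sample on each zero crossing
print(f"{hidden_overshoot_db(6, 0):.2f} dB")   # 1.25 dB
```

Normalizing the first case to full scale gives the roughly +3 dB tone, and the last case gives about the same +1.25 dB as the first test track.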

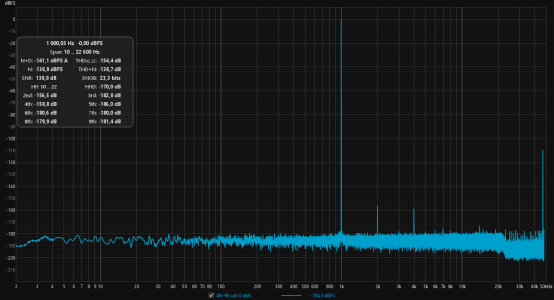

I measured the digital SPDIF output directly. Here is the measurement without resampling:

As can be seen, the track is transmitted perfectly via SPDIF as long as there is no resampling: it has a THD+N of -136 dB!

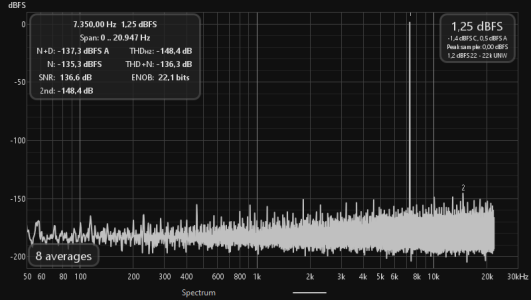

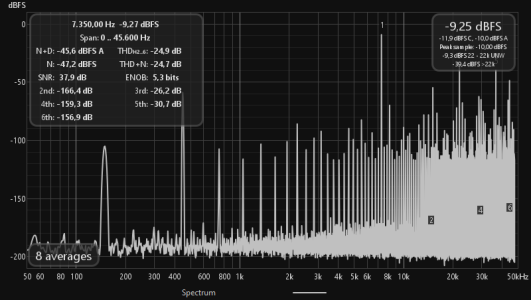

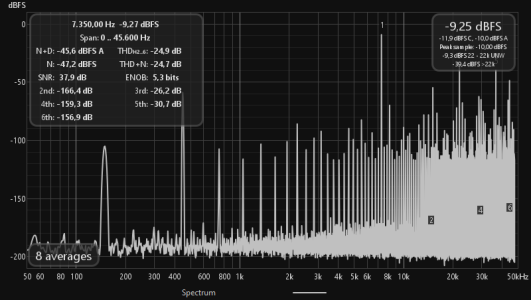

And here with resampling to 96k, using the Fixed Resolution setting:

Look at the THD+N value: now it is incredibly bad at -24.7 dB!
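As a plausibility check, a figure in this range is what a plain hard clip at full scale would produce for a tone that peaks 1.25 dB over. The sketch below is a model under assumptions, not a description of what the WiiM does internally: it treats the overflow as an ideal clip, uses a made-up helper `thd_plus_n_db`, and picks the sample count and number of cycles arbitrarily for coherent sampling.

```python
import math

def thd_plus_n_db(signal: list[float], cycles: int) -> float:
    """Residual power relative to the fundamental, in dB.

    Assumes `signal` holds exactly `cycles` periods of the test tone.
    """
    n = len(signal)
    w = 2 * math.pi * cycles / n
    # Project onto sine and cosine at the fundamental to extract it.
    a = 2 / n * sum(s * math.sin(w * i) for i, s in enumerate(signal))
    b = 2 / n * sum(s * math.cos(w * i) for i, s in enumerate(signal))
    mean = sum(signal) / n
    # Everything that is neither DC nor fundamental counts as distortion.
    residual = sum((s - mean - a * math.sin(w * i) - b * math.cos(w * i)) ** 2
                   for i, s in enumerate(signal)) / n
    fundamental = (a * a + b * b) / 2
    return 10 * math.log10(residual / fundamental)

N, CYCLES = 16_384, 1_001   # coherent sampling, arbitrary choice
AMP = 10 ** (1.25 / 20)     # tone peaking 1.25 dB above full scale
tone = [AMP * math.sin(2 * math.pi * CYCLES * i / N) for i in range(N)]
clipped = [max(-1.0, min(1.0, s)) for s in tone]

result = thd_plus_n_db(clipped, CYCLES)
print(f"clipped tone: {result:.1f} dB")  # about -24.7 dB
```

That the simple clip model lands so close to the measured value suggests the overs are simply being clipped, though the measurement alone cannot prove it.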

The solution to the problem would be for the WiiM to reduce the volume before resampling.

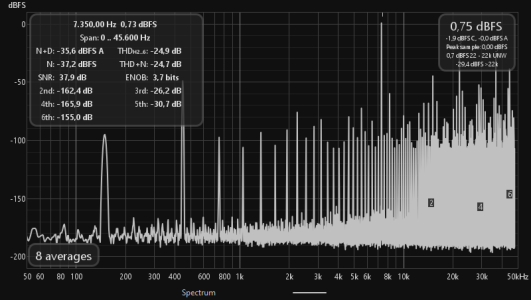

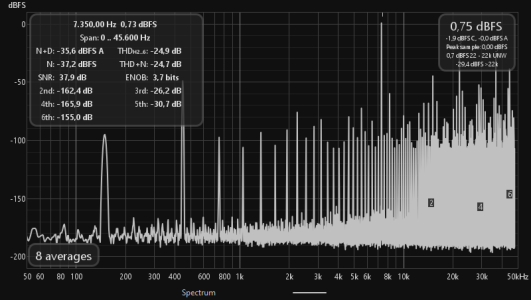

I emulate that here by using the version with reduced volume:

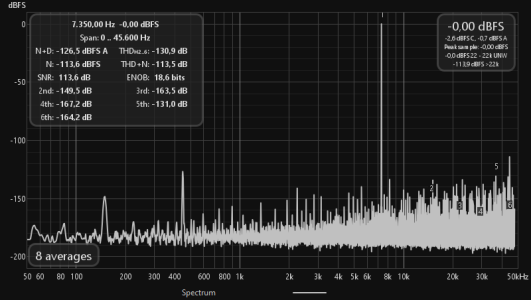

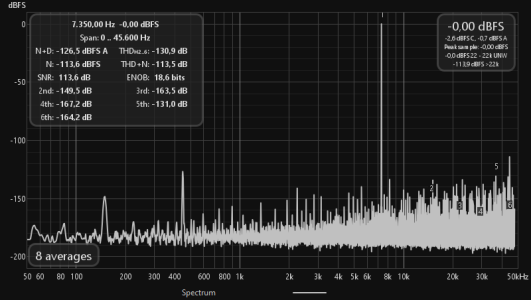

And no, neither the volume knob nor the pre-gain setting helps. As can be seen here, those just reduce the volume; apparently both are applied after the resampling.

At volume setting 80, instead of 100:

And now with a pre-gain of -10dB:

Because of how bad the loudness war has become, the issue shown is relevant for almost anything mastered after the late 90s.

The only way to avoid this issue is to reduce the volume before any resampling is done. The amount of reduction should be 3 dB.
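Why the order matters can be shown with a toy model. In the sketch below, `hard_clip` stands in for a fixed-point stage that cannot exceed full scale, and `resampled` holds the values an ideal resampler would want to output for a tone peaking 1.25 dB over. Both are assumptions for illustration; since gain and resampling are linear, scaling the input before resampling is modeled as scaling these ideal values before the clip.

```python
import math

def hard_clip(x: float) -> float:
    """Model of a fixed-point stage that cannot exceed full scale."""
    return max(-1.0, min(1.0, x))

HEADROOM = 10 ** (-3 / 20)   # -3 dB as a linear factor
AMP = 10 ** (1.25 / 20)      # resampled tone peaking 1.25 dB over full scale
resampled = [AMP * math.sin(2 * math.pi * i / 64) for i in range(64)]

# Gain after the resampler: the overs are clipped first, then made quieter.
gain_after = [HEADROOM * hard_clip(s) for s in resampled]
# Gain before the resampler: the scaled waveform never reaches full scale.
gain_before = [hard_clip(HEADROOM * s) for s in resampled]

# Reference: the attenuated waveform with no clipping anywhere.
ideal = [HEADROOM * s for s in resampled]
err_after = max(abs(a - b) for a, b in zip(gain_after, ideal))
err_before = max(abs(a - b) for a, b in zip(gain_before, ideal))

print(f"worst-case error, gain after:  {err_after:.4f}")
print(f"worst-case error, gain before: {err_before:.4f}")
```

With the gain applied afterwards the flattened crests stay flattened, just quieter; with the gain applied first there is nothing to clip.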

I have shown what happens when upsampling with the Fixed Resolution setting; however, the same also happens when downsampling.

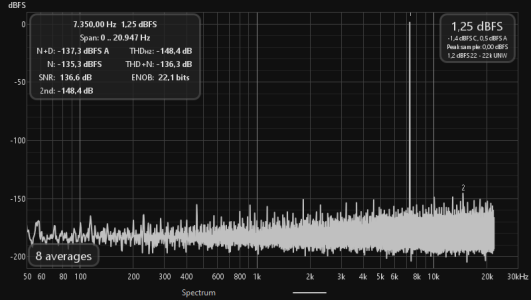
