There's never been one commonly agreed-upon standard for measuring output power, though.

Different amplifier topologies have different limitations depending on the test signal and procedure used; that much is clear. The real question is which method is most relevant for reproducing music, and even that isn't all that easy to answer.

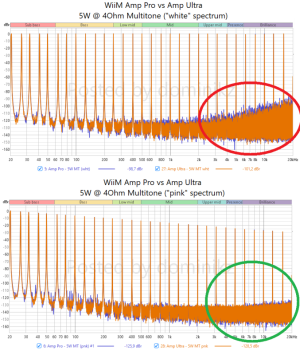

Are we asking for a standard where the amp must be able to deliver its rated power at any single frequency from 20 Hz to 20 kHz? Nobody ever needs 100 W at 20 kHz for home Hi-Fi. Are we asking for a signal containing "all" (or many?) frequencies from 20 Hz to 20 kHz? If so, with what spectral distribution? Pretty much the hardest test for class-D amplifiers to pass is a multi-tone test (equally distributed power) at low distortion, and the potential problems there mainly stem from the treble region. However, such a test says little about whether and how the amp can cope with high power output below, say, 50 Hz (which matters quite a bit with real music and real customer expectations).
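How much the "spectral distribution" question matters can be seen with a little counting. The sketch below assumes every tone in the multi-tone signal has the same amplitude (one possible reading of "equally distributed power"), so each tone contributes the same share of the mean power. The function names, the 1000-tone count and the linear vs. logarithmic spacing options are illustrative assumptions, not part of any standard.

```python
import math


def tone_frequencies(n, f_lo=20.0, f_hi=20_000.0, spacing="linear"):
    """Return n tone frequencies from f_lo to f_hi (both included).

    Hypothetical helper: 'linear' spaces tones evenly in Hz,
    'log' spaces them evenly per octave.
    """
    if n < 2:
        raise ValueError("need at least two tones")
    if spacing == "linear":
        step = (f_hi - f_lo) / (n - 1)
        return [f_lo + k * step for k in range(n)]
    if spacing == "log":
        ratio = f_hi / f_lo
        return [f_lo * ratio ** (k / (n - 1)) for k in range(n)]
    raise ValueError(f"unknown spacing: {spacing!r}")


def power_share(freqs, f_min=0.0, f_max=math.inf):
    """Fraction of total mean power in tones with f_min <= f < f_max.

    Assumes every tone has the same amplitude A, so each one adds
    A**2 / 2 to the mean power and the share is just a tone-count ratio.
    """
    inside = sum(1 for f in freqs if f_min <= f < f_max)
    return inside / len(freqs)


if __name__ == "__main__":
    for spacing in ("linear", "log"):
        freqs = tone_frequencies(1000, spacing=spacing)
        below_50 = power_share(freqs, f_max=50.0)
        above_5k = power_share(freqs, f_min=5_000.0)
        print(f"{spacing:>6}: {below_50:.1%} below 50 Hz, "
              f"{above_5k:.1%} at or above 5 kHz")
```

With linear spacing only 2 of the 1000 tones (0.2 % of the power) fall below 50 Hz while 75.1 % of the power sits at or above 5 kHz; logarithmic spacing shifts that to 13.3 % below 50 Hz and 20.1 % at or above 5 kHz. Either way, passing such a test at full power says little about sustained bass output.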

There's no doubt that the power figures quoted for many of the cheap class-D desktop amps are ridiculous and simply technically impossible. At the same time, it's also impossible to give one single number that fully describes an amplifier's capabilities.
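A quick sanity check shows why some of those figures can't be true. The sketch below computes an idealised upper bound for continuous sine output, P = V_peak² / (2 · R_load), assuming a lossless output stage that swings fully rail to rail into a resistive load, plus a rough ceiling from the power adapter's rating. The function names and the 90 % efficiency default are hypothetical choices for illustration; real amps land below both bounds.

```python
def max_sine_power(supply_v, load_ohms, bridged=True):
    """Idealised ceiling on continuous sine power per channel, in watts.

    Assumes a lossless output stage that swings rail to rail:
    - bridged (BTL): peak voltage across the load is about supply_v
    - single-ended half-bridge on one supply: peak is about supply_v / 2
    Real amps lose some swing to switch resistance, filter inductors
    and supply sag, so actual figures land below this bound.
    """
    v_peak = supply_v if bridged else supply_v / 2
    return v_peak ** 2 / (2 * load_ohms)


def adapter_ceiling(adapter_v, adapter_a, efficiency=0.9):
    """Rough ceiling on total continuous output power across all channels.

    Continuous output cannot exceed what the adapter delivers; the
    90 % default efficiency is an assumed, fairly optimistic figure.
    """
    return adapter_v * adapter_a * efficiency


if __name__ == "__main__":
    for supply in (12, 19, 24):
        for load in (4, 8):
            watts = max_sine_power(supply, load)
            print(f"{supply} V supply, {load} ohm, BTL: "
                  f"<= {watts:.1f} W per channel")
    print(f"24 V / 4 A adapter: <= {adapter_ceiling(24, 4):.0f} W total")
```

For example, a 24 V supply driving 4 Ω in bridge mode tops out at 72 W per channel before any losses, so a rating several times higher cannot describe continuous sine output from that supply. Short bursts can briefly exceed the adapter bound by drawing on the supply capacitors, but never the rail-voltage bound.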