My recommendations for using a WiiM Pro

- Thread starter Melomane

- Start date

BowsAndArrows

Trusted Contributor

"I definitely don't understand what the abstracted digital layer of data might be."

the 1s and 0s... which are physically carried by (analog) squared-off electrical waves in the case of LAN cables.

BowsAndArrows

Trusted Contributor

yes, these 1:1 isolation transformers in the network switches are designed to block common mode interference but let through the differential signals carried by the LAN cables (iirc over 2 pairs of wire).

but the way i understand it (i could be wrong) "regular" network switches are designed with tolerances to keep the abstracted digital layer of data intact, so they don't eliminate that much common mode interference. at the end of the day they are networking equipment first, and were not built from the ground up for audio.

this is where things get a bit hairy, because people disagree as to what level of common mode interference is audible etc. but if we put that aside, basically only special shielded switches (like those for medical or industrial use) are any good at eliminating this common mode interference. those and obviously the $9000 audiophile ones.

you can, of course, find a few cheap DIY solutions as well to try and isolate your streamer from the rest of the LAN's noise. it's not necessary to explore these ideas with expensive branded equipment...
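For anyone who wants to see the common mode vs differential idea in numbers, here is a minimal Python sketch. It is an idealised toy model, not how a real Ethernet PHY works: the two-level encoding, the voltage swing and all function names are assumptions made up for illustration, and the subtraction stands in for what the transformer and differential receiver do in hardware.

```python
import random

def drive_pair(bits, swing=1.0):
    """Encode each bit as opposite voltages on the two wires of a pair (toy model)."""
    half = swing / 2
    return [(half, -half) if b else (-half, half) for b in bits]

def add_common_mode(pair_samples, amplitude, seed=1):
    """Add the SAME random noise voltage to both wires of the pair."""
    rng = random.Random(seed)
    noisy = []
    for plus, minus in pair_samples:
        n = rng.uniform(-amplitude, amplitude)
        noisy.append((plus + n, minus + n))
    return noisy

def differential_receive(pair_samples):
    """Decide each bit from the difference between the wires; equal noise cancels."""
    return [1 if (plus - minus) > 0 else 0 for plus, minus in pair_samples]

def common_mode_level(pair_samples):
    """Average voltage of the two wires, i.e. the part a differential receiver ignores."""
    return [(plus + minus) / 2 for plus, minus in pair_samples]

bits = [1, 0, 1, 1, 0, 0, 1, 0]
noisy = add_common_mode(drive_pair(bits), amplitude=5.0)
print(differential_receive(noisy) == bits)            # bits survive the noise
print(max(abs(v) for v in common_mode_level(noisy)))  # yet the noise is still on the wires
```

The sketch only covers the bit-recovery side. Whether the common mode voltage that remains on the wires matters for an audio device downstream is exactly the part people disagree on, and the code says nothing about that.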

So, even if we accepted that for a moment, would the reverse be that tighter tolerances would not keep the "1s and 0s" intact?

The digital signal (and yes, there are digital signals, of course, and why they are called such is very well defined and not for debate amongst scientists) is the only source of what's going to be reproduced as music. The only task in the digital domain is not to lose or change the order of any of those high values and low values and that's it.

Even if Hans Beekhuyzen might imply otherwise (he doesn't really say it loud and clear), there is no time information within the digital signal (so there's nothing that could be "smeared"). The digital signal just contains high and low values, and the receiving part has to know how to interpret them (including the timing), either through negotiation or inherently by definition of a fixed protocol.

Cleverly (maybe), he always comes back to (simulated) samples of S/PDIF when he tries to explain how a digital signal would always be "analog", and gives the impression that the digital content is in great danger wherever digital signals are transmitted. Quite the contrary is true. The very reason why digital beat analog in every way is that the exact form of the signal doesn't matter, as long as it isn't too heavily misshapen.

With a 5 GHz WiFi signal carrying all sorts of data simultaneously (including actual audio data) there is no direct equivalence between the waveform and the digital audio data, at all. Also, when the same digital payload is transmitted through a LAN cable instead, there is no "electric wave" propagating within that cable, at all.

Incorrectly equating the "digital audio signal" with the signal being physically transmitted, and making you think of it as "the waveform that gets distorted", might be the key fault in Beekhuyzen's contribution to the discussion.
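To illustrate the point that the exact form of the signal doesn't matter as long as it isn't too heavily misshapen, here is a small Python sketch. It is a toy model under stated assumptions: bits are sent as nominal 0 V and 1 V levels, noise is bounded and uniform, and the receiver uses a fixed 0.5 V threshold. None of these values or function names come from a real standard.

```python
import random

def transmit(bits, noise_amplitude, seed=0):
    """Map bits to nominal voltages (0 -> 0.0 V, 1 -> 1.0 V) and add bounded noise."""
    rng = random.Random(seed)
    return [b + rng.uniform(-noise_amplitude, noise_amplitude) for b in bits]

def receive(voltages, threshold=0.5):
    """Recover bits by comparing each received voltage with a decision threshold."""
    return [1 if v > threshold else 0 for v in voltages]

def count_errors(sent, received):
    """Number of positions where the received bit differs from the sent bit."""
    return sum(s != r for s, r in zip(sent, received))

bits = [1, 0, 1, 1, 0, 0, 1, 0]

# Noise smaller than the distance to the threshold: every bit is recovered.
mild = receive(transmit(bits, noise_amplitude=0.3))
print(count_errors(bits, mild))

# Noise far larger than the signal swing: errors become possible.
severe = receive(transmit(bits, noise_amplitude=2.0))
print(count_errors(bits, severe))
```

As long as the noise stays below the margin to the threshold, the received waveform can look quite ugly and the recovered bits are still identical to what was sent.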

well, no... certain protocols that concern us do integrate temporal elements, "a clock". whether they matter or not, because they are reprocessed at the input of the next element, is another subject... see main topic.


and this aspect can be more or less degraded...

(and it can be observed in the analog domain of the signal, as with the S/PDIF protocol etc.)

RME: Support (archiv.rme-audio.de)

RME: Information about international support, Support Hotlines, E-Mail Support

my point is just a reaction to the classic shortcut underlying all these discussions... of "just ones and zeros"

(well... we do come across "DPLL" settings on very recent DACs highlighted on ASR ;-) )


The digital signal might carry a clock signal used by the receiver. The digital data itself contains no information about time. You can play it back at any speed without variation in pitch.


BowsAndArrows

Trusted Contributor

Yes, theoretically that is correct - if you try to block ALL the common mode noise with a lot of chokes etc., you could end up affecting the differential mode currents that carry the digital signal enough to introduce errors into the bitstream.


the nature of the signals is probably where we disagree the most - the digital layer is an abstraction, because you can measure the voltage in the lines carrying the signal. See this excerpt from the Wikipedia article you linked, or even just look at the waveform they have drawn - at any given moment in that signal, the voltage (for example) can take any of an infinite number of real values - it's analog at the physical layer, but digital at the abstracted layer...

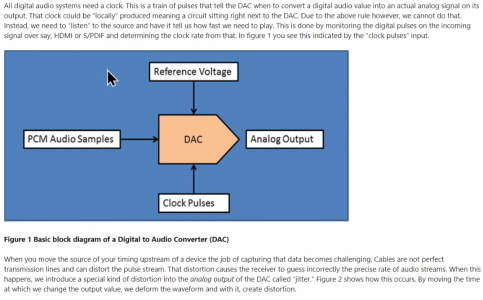

you're right when you say that the data in a normal LAN stream has no timing information, and that even a really noisy signal will in most cases not affect the stream of bits, which is why computers don't notice any issues with noisy signals. but that's why the audio has to be "clocked" artificially by the audio equipment according to the sample rate (44.1kHz for example), as @canard mentioned, once it gets into the chain.

(this screenshot is from the Audio Science Review YouTube video on jitter!!)

the most important clock in your system is the one your DAC depends on at the time of D-A conversion, and which clock that is varies depending on how the DAC receives the audio data (e.g. with Toslink the DAC depends on receiving clock information from the crystal in the transmitting device - unless they implemented a clever workaround in the DAC like Chord did in their Mojo 2). anything that affects the timing (but also the reference voltage - most DACs use voltage-controlled crystal oscillators) will affect the eventual analog output...
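To put rough numbers on how timing errors at the conversion clock turn into errors in the analog output, here is a minimal Python sketch. It is a simplified model under stated assumptions: a pure 10 kHz tone, a 44.1 kHz sample rate, Gaussian random jitter, and the effect of a mistimed conversion approximated by evaluating the sine at the wrong instant. The function names and jitter values are made up for illustration and do not describe any particular DAC.

```python
import math
import random

def sample_sine(freq_hz, sample_rate_hz, n_samples, jitter_rms_s=0.0, seed=2):
    """Evaluate a unit sine at nominal sample instants plus random timing error."""
    rng = random.Random(seed)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate_hz + rng.gauss(0.0, jitter_rms_s)
        samples.append(math.sin(2 * math.pi * freq_hz * t))
    return samples

def rms_error(a, b):
    """Root-mean-square difference between two equally long sample lists."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

def to_db(ratio):
    """Express an amplitude ratio in decibels relative to full scale (1.0)."""
    return 20 * math.log10(ratio)

ideal = sample_sine(10_000, 44_100, 4096)
err_1ns = rms_error(ideal, sample_sine(10_000, 44_100, 4096, jitter_rms_s=1e-9))
err_100ns = rms_error(ideal, sample_sine(10_000, 44_100, 4096, jitter_rms_s=100e-9))

print(f"1 ns RMS jitter:   error {to_db(err_1ns):.1f} dB re full scale")
print(f"100 ns RMS jitter: error {to_db(err_100ns):.1f} dB re full scale")
```

The error grows with both the amount of jitter and the signal frequency. Whether a given level is audible is a separate question that this sketch does not answer.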

a lot of what is in our discussion is a slight oversimplification for brevity... check out these videos that explain much better what i'm trying to get across. Ted Smith did a series on timing, jitter and clocks that can give you an idea of what we believe. similarly, GoldenSound did a comparison between DDCs with measurements to see what could be causing the differences between them.

"I see in that video that he mentions that feature was introduced by them in 2002, so not new. How prevalent do you think similar technology is in DACs say built after then, or 2010 or...?"

I would expect every modern, well designed DAC to perform on a similar level at least. BTW, 2002 was for SteadyClock, now it's an evolution - SteadyClock FS.

Brantome

Major Contributor

- Joined: Oct 20, 2022
- Messages: 6,842

Somebody should tell Hans

steadyshot

Senior Contributor

- Joined: Mar 14, 2023
- Messages: 915


For him the Grimm MU2 achieves that independence from the source. If you think RME is expensive, that is 18x more expensive…

In any case, a manufacturer should not be encouraged to think that the user's DAC will fix all the source's shortcomings…

Brantome

Major Contributor


Well, could I turn the question on its head? Which "modern, well designed DACs" (or say DACs in wide use) can't handle the jitter produced by WiiM streamers? Or has the market more than its fair share of poorly designed DACs?


I think the real problem is that everything in audio tends to be either overestimated or underestimated, looked at in an exaggerated way or a dismissive one. The fact that the RME interface can receive audio data from the streamer in a perfect, fully transparent way doesn't mean that the streamer's digital output is itself SOTA. And, on the contrary, the presence of jitter or unwanted noise on the output doesn't mean that it will destroy the quality of the signal on the receiver side, as there are ways to handle it.

BowsAndArrows

Trusted Contributor

yeh the guys at PS Audio have been talking about this stuff for donkey's years. they really are one of the best examples of a legacy company adopting new technologies at the right time and not losing focus on achieving excellence in performance.

others on the forum, i'm sure, know much more about the implementations of PLL circuitry and the like in all these good DACs.

maybe WiiM could change that? as much as we discuss all these little details about how to configure our systems etc., for me the main reason i'm on here is because i can see a future where WiiM is the Framework (the modular laptop company) of digital audio - it could easily become the modders' hardware of choice if they release more info to the community and decide to move towards a more modular future...

of course i notice all the effort on the software side, and some of us again dream that WiiM can one day match roon or even better their offerings on the music/metadata/multiroom front... they have such a track record of delivering what the community wants/needs i wouldn't put it past them.

btw if there are any lifetime subscriptions for this roon-killer, then lemme know, haha!

steadyshot

Senior Contributor



All I am saying is that the effort for a good implementation should go into both designs, streamer and DAC.

Yes, but you can also compensate for one device's weaknesses with another device's capabilities. Having a DAC with effective jitter suppression might be more beneficial than using a hyper ultra expensive streamer connected to a not so well designed DAC. You can see in the GoldenSound video how effective PLLs can be.
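As a rough illustration of how a loop in the receiver can suppress incoming jitter, here is a minimal Python sketch of a first-order tracking loop. It is a heavily simplified stand-in for a real PLL: it assumes the nominal clock period is known exactly, models jitter as independent Gaussian noise on each edge, and uses a made-up loop gain. All names and values are assumptions for illustration, not taken from any DAC's actual design.

```python
import math
import random

def jittered_edges(period_s, n_edges, jitter_rms_s, seed=3):
    """Ideal clock edges at n * period, each displaced by random timing noise."""
    rng = random.Random(seed)
    return [n * period_s + rng.gauss(0.0, jitter_rms_s) for n in range(n_edges)]

def track_clock(edges, period_s, loop_gain=0.05):
    """Predict each edge one period after the last estimate, then correct the
    prediction by only a small fraction of the observed timing error."""
    estimate = edges[0]
    recovered = []
    for edge in edges:
        estimate += loop_gain * (edge - estimate)
        recovered.append(estimate)
        estimate += period_s
    return recovered

def rms_jitter(edges, period_s):
    """RMS deviation of the edges from the ideal n * period grid."""
    deviations = [e - n * period_s for n, e in enumerate(edges)]
    return math.sqrt(sum(d * d for d in deviations) / len(deviations))

PERIOD = 1 / 44_100
edges = jittered_edges(PERIOD, 5000, jitter_rms_s=1e-9)
recovered = track_clock(edges, PERIOD)

print(f"incoming jitter:  {rms_jitter(edges, PERIOD) * 1e12:.0f} ps RMS")
print(f"recovered jitter: {rms_jitter(recovered, PERIOD) * 1e12:.0f} ps RMS")
```

A smaller loop gain averages over more edges and rejects more of the fast jitter, at the cost of reacting more slowly. Slow drift of the source clock passes through a loop like this largely untouched, which is one reason real designs are more elaborate.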

steadyshot

Senior Contributor



OK... unless someone believes that a DAC has a sound signature and wants to extract as much of it as possible.

You mean, unless someone believes in miracles just because the term "sound signature" is so sexy, not because there could be a reason?
