> Are you applying any volume attenuation?

What for? I just showed that there is no conversion from 16 bit to 24 bit with fixed resolution if there is no sample rate change. Why is volume attenuation important here?


Issue with Distortion at Very Low Volume When Using WiiM Ultra with External DAC

- Thread starter AssafAviv

- Start date

> Where, exactly?

I'll use Wikipedia as I don't remember the details offhand: in byte 4, one bit indicates whether the base length is 20 or 24, and the following 3 bits indicate roughly how much to subtract.

16b is typically 20b minus 4.
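For anyone who wants to poke at that byte, here is a rough sketch of the decoding. The bit positions and codes are taken from my recollection of the `IEC958_AES4_CON_*` constants in the Linux ALSA header `asoundef.h`, so treat them as an assumption and check them against the standard; `decode_word_length` is just an illustrative name.

```python
# Sketch: decode the declared sample word length from consumer
# channel-status byte 4. Codes mirror the IEC958_AES4_CON_* constants in
# ALSA's asoundef.h as remembered; this is an assumption, not a spec quote.

MAX_WORDLEN_24 = 1 << 0  # bit 0: 0 = 20-bit maximum, 1 = 24-bit maximum

# 3-bit code held in bits 1..3 -> number of bits below the maximum length.
# Code 0 means "word length not indicated"; other codes are treated as unknown.
BITS_BELOW_MAX = {1: 4, 2: 2, 4: 1, 5: 0, 6: 3}


def decode_word_length(byte4):
    """Return the declared word length in bits, or None if not indicated."""
    max_len = 24 if byte4 & MAX_WORDLEN_24 else 20
    code = (byte4 >> 1) & 0x7
    if code not in BITS_BELOW_MAX:
        return None
    return max_len - BITS_BELOW_MAX[code]


print(decode_word_length(0b0010))  # 20-bit base, code 1
print(decode_word_length(0b1011))  # 24-bit base, code 5
```

With these codes, 16b is signalled as a 20-bit base minus 4, and a full 24b stream as a 24-bit base minus 0.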

> Ok, that's indeed the sane place to do it.

Well, not exactly. No way to add digital headroom.

> What for? I just showed that there is no conversion from 16 bit to 24 bit with fixed resolution if there is no sample rate change. Why is volume attenuation important here?

The entire point of the conversation was the effect of volume control, mate. Of course converting 16b to 24b isn't in itself going to change anything in the signal; nobody ever argued that.

The argument here is that large attenuation will cause a significant loss on 16b content if it's also transmitted as 16b content over SPDIF. It can be avoided by using fixed resolution to force a bump to 24b, provided WiiM does the bump before the attenuation, which you seem to indicate they do. Good. Unfortunately, using fixed resolution also brings resampling into the picture, which in that case isn't desired.
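To put a rough number on that loss: each bit is worth about 6.02 dB, so attenuation eats bits from the top of a fixed-width word. A small sketch of the arithmetic (`bits_left` is a made-up helper, and this ignores dither and noise shaping):

```python
import math

DB_PER_BIT = 20 * math.log10(2)  # about 6.02 dB of level per bit


def bits_left(container_bits, attenuation_db):
    """Bits still exercised by a full-scale signal after attenuation."""
    return container_bits - attenuation_db / DB_PER_BIT


for att in (0.0, 20.0, 40.0, 60.0):
    print(f"{att:5.1f} dB: 16b -> {bits_left(16, att):4.1f} bits, "
          f"24b -> {bits_left(24, att):4.1f} bits")
```

At around 60 dB of attenuation a 16b output has only about 6 bits left for the signal, while a 24b output still has about 14.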

> I'll use Wikipedia as I don't remember the details offhand: in byte 4, one bit indicates whether the base length is 20 or 24, and the following 3 bits indicate roughly how much to subtract.
>
> 16b is typically 20b minus 4.

And WiiM always sends the marking that the 4 additional bits are used for audio data. 24 - 4 is not 16.

> Well, not exactly. No way to add digital headroom.

Why would you, at that point in the chain? I don't get it. If you were to add zero bits at the top instead of scaling the bottom (i.e., adding digital headroom, as you call it), you would introduce some volume attenuation as a side effect of the conversion, which here too is not desired.

> Why would you, at that point in the chain? I don't get it. If you were to add zero bits at the top instead of scaling the bottom (i.e., adding digital headroom, as you call it), you would introduce some volume attenuation as a side effect of the conversion, which here too is not desired.

If you don't add digital headroom, the resampling process, which is in fact an ASRC, is prone to clipping. This is because the process is similar to a chain of DA and AD conversions. There are strong clipping artifacts for intersample overs, for example. And it does indeed happen, unless this behavior changed after the last FW update.

> If you don't add digital headroom, the resampling process, which is in fact an ASRC, is prone to clipping. This is because the process is similar to a chain of DA and AD conversions. There are strong clipping artifacts for intersample overs, for example. And it does indeed happen, unless this behavior changed after the last FW update.

In the context of resampling then yes, you need a bit of headroom. You can also process the resampling as 32b and clamp back at the output. But here too we don't really know what WiiM is doing, and none of this impacts the basics of this discussion, which is about the deleterious effects of attenuation on SPDIF outputs, especially in the absence of fixed resolution.
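For reference, the textbook worst case for an intersample over is a sine at a quarter of the sample rate with a 45 degree phase offset. A minimal sketch, assuming ideal band-limited reconstruction (the names here are illustrative and this says nothing about how WiiM's resampler behaves):

```python
import math


def sample(t, peak_scale):
    """fs/4 sine with a 45 degree phase offset; t is in sample periods."""
    return peak_scale * math.sin(math.pi / 2 * t + math.pi / 4)


# Scale the tone so the stored samples just reach full scale (1.0).
scale = math.sqrt(2)
stored = [sample(n, scale) for n in range(8)]
# Values an ideal 2x resampler would have to produce between stored samples.
between = [sample(n + 0.5, scale) for n in range(8)]

stored_peak = max(abs(s) for s in stored)
true_peak = max(abs(s) for s in between)
headroom_db = 20 * math.log10(true_peak / stored_peak)
print(f"stored peak {stored_peak:.3f}, true peak {true_peak:.3f}, "
      f"headroom needed {headroom_db:.2f} dB")
```

The stored samples never exceed full scale, yet the waveform between them peaks about 3 dB higher, which is the room a resampler needs for this particular tone. Real music will usually need less.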

> But here too we don't really know what WiiM is doing, and none of this impacts the basics of this discussion, which is about the deleterious effects of attenuation on SPDIF outputs, especially in the absence of fixed resolution.

Volume attenuation is not my whole life. You asked, I answered and pointed out the unwanted side effects.

> Again, what on earth do you mean by effective bits?

I guess you can see the effective bits value in REW, can't you? I mean effective bits as shown there.

> You mean the RME DAC ignores the former and counts the number of zero bits in the sample data?

Exactly.

> Volume attenuation is not my whole life. You asked, I answered and pointed out the unwanted side effects.

It still doesn't quite add up. You want headroom for the resampling algorithm, but getting it by placing the attenuation before the conversion is not going to help. Yes, you may end up with some headroom as a side effect of the volume not being at 100%, but tough luck if it is, and if it isn't you trade that for dynamic range.

Anyway, this discussion is becoming rather pointless at this stage and is far from the original subject of the conversation.

What would be nice for those using external DACs would be for WiiM to support DAC-level attenuation when on USB and the DAC supports it, and to support upscaling to 24b without resampling as an option for those whose DAC doesn't.

In the meantime, keep the volume down further down the chain (amp gain, DAC, ...) and keep the WiiM volume range restricted. I keep my gain low on the Apollon to avoid accidentally blowing my speakers anyway.

> Not only would that be a gross protocol violation, but it should be clearly visible, as 16b content with attenuation would then display on the DAC as >16b... Do we have any evidence of that ever happening?

What can I say... maybe... read the RME DAC manual?

> What can I say... maybe... read the RME DAC manual?

I just had a look at the ADI 2 manual and I can't see anything in there along the lines of what you seem to imply... No particular mention of how the SPDIF sample size is obtained or anything of the sort, other than that it's compliant with the relevant standard. Do you have a more specific reference?

> It still doesn't quite add up. You want headroom for the resampling algorithm, but getting it by placing the attenuation before the conversion is not going to help.

I don't understand why you don't understand but, believe it or not, it helps. At least in any case where the resampling process is going to produce samples above 0 dBFS and there is no room for them.

> I just had a look at the ADI 2 manual and I can't see anything in there along the lines of what you seem to imply... No particular mention of how the SPDIF sample size is obtained or anything of the sort, other than that it's compliant with the relevant standard. Do you have a more specific reference?

"The Bit column shows the amount of bits found in the SPDIF audio signal. Note that a 24 bit signal that is shown as 16 bit is indeed 16 bit, but a signal shown as 24 bit might contain only 16 bit real audio plus 8 bits of noise…"

Do you have any experience with RME gear?

> I don't understand why you don't understand but, believe it or not, it helps. At least in any case where the resampling process is going to produce samples above 0 dBFS and there is no room for them.

Sure, I understand that, though not particularly far above that, so you need headroom but not a ton of it. Though that's only an issue if your source content uses the full dynamic range... Sadly, the loudness wars probably ensure that is the case way more often than it should be.

That said, I still maintain that the user volume control isn't the right spot, for all the reasons explained above.

I'd rather have the device itself add an extra zero bit at the top before resampling and scale down at the end, a very different approach. It will still result in a little bit of extra attenuation, but it can prevent some of the loss and avoid amplifying the distortion caused by that loss in the resampling process.

> "The Bit column shows the amount of bits found in the SPDIF audio signal. Note that a 24 bit signal that is shown as 16 bit is indeed 16 bit, but a signal shown as 24 bit might contain only 16 bit real audio plus 8 bits of noise…"
>
> Do you have any experience with RME gear?

That doesn't in itself say much about what the DAC really does. If anything, I would read it as the RME using *fewer* bits than what's indicated...

Fact remains, if the WiiM says there are only 16 bits in there, it wouldn't make sense for a DAC to try to extract more; that would just be junk.

Which brings us back to square one. 16b source + attenuation + 16b SPDIF output *WILL* lose resolution, potentially significantly at high attenuation, regardless of what your magic RME DAC does or doesn't do. The data simply isn't there.

And no, I have never used an RME DAC, but I don't see how that would matter in this specific case.

> And no, I have never used an RME DAC, but I don't see how that would matter in this specific case.

It would give you a chance to understand the meaning of the citation.

> That doesn't in itself say much about what the DAC really does. If anything, I would read it as the RME using *fewer* bits than what's indicated...

It says that if an incoming signal is marked as 24 bits but the stream is in fact 16 bits, then 16 bits are shown, as determined from an analysis of the stream.
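One way such a stream analysis could work (purely an assumption on my part, not something the RME manual documents) is to look for low-order bits that are zero in every sample. A minimal sketch, with `active_bits` as a hypothetical helper:

```python
def active_bits(samples, container_bits=24):
    """Bits actually exercised by integer samples held in a container.

    Counts the low-order bits that are zero in every sample and subtracts
    that count from the container width.
    """
    mask = (1 << container_bits) - 1
    combined = 0
    for s in samples:
        combined |= s & mask  # two's complement view of negative samples
    if combined == 0:
        return 0  # digital silence: no bits in use
    trailing_zeros = (combined & -combined).bit_length() - 1
    return container_bits - trailing_zeros


# 16-bit samples zero-padded into a 24-bit container report as 16 bits.
padded = [x << 8 for x in (1000, -12345, 32767)]
print(active_bits(padded))
```

A stream that has been attenuated or resampled at 24 bits fills the low bits, so a meter like this would then report 24 regardless of how much real information is in there.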

> if the WiiM says there is only 16 bits

The fact is that it does not happen.

The WiiM does not output 24 bits for 16-bit inputs unless it is forced to do so via the "Fixed Resolution" setting. The reason for the contrary belief is that somewhere in the documentation it is mentioned that the CALCULATION is done in 32 bits. This might be the case; however, the calculated result is then rescaled back to the same bit depth as the input as long as the "Fixed Resolution" setting is not enabled.

And yes I have the measurements to back this up!

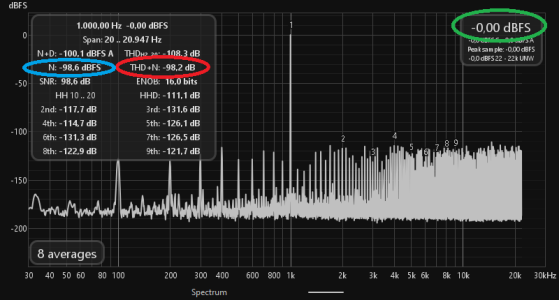

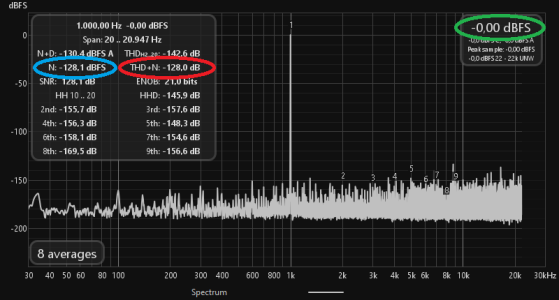

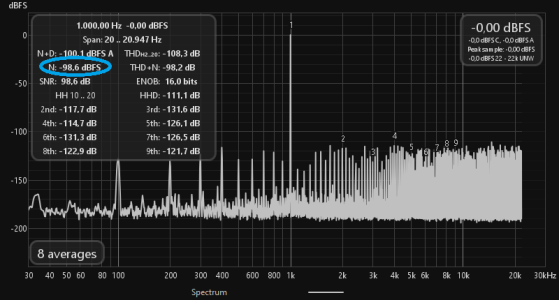

Here you see the spectrum of the playback of a 44.1 kHz, 16-bit file containing a 1 kHz sine at volume 100, measured directly on the digital SPDIF signal, with the "Fixed Resolution" setting disabled:

In the upper right corner you have the volume in green, which is set to max, the noise floor in blue, matching that of a 16-bit stream, and the resulting THD+N in red.

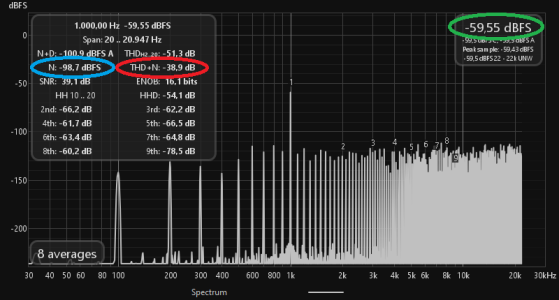

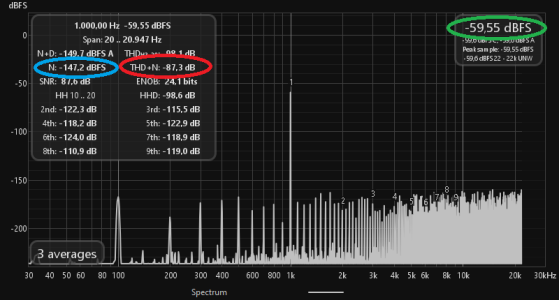

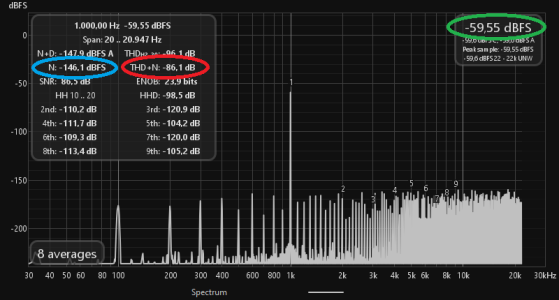

Now the same at a volume setting of 1:

This time the volume should be at -0.6*99 = -59.4 dB, and indeed we see a value of -59.55 dB in green. Observe the noise: it has not moved at all and is still at the 16-bit floor just as before, leading to a degraded THD+N of only 38.9 dB. This results in really poor quality, especially when the signal is not maxed out and the music has quieter passages. You will hear this noise floor if you get close to your speakers!
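As a sanity check on that reading, here is a back-of-the-envelope estimate. It assumes the 0.6 dB per volume step implied above and the usual 6.02·N + 1.76 dB figure for an ideal undithered quantizer; the function names are just for illustration.

```python
DB_PER_STEP = 0.6  # assumed from the -0.6*99 figure above


def volume_to_db(volume):
    """Map a volume setting (1..100) to attenuation in dB, 100 being 0 dB."""
    return -DB_PER_STEP * (100 - volume)


def ideal_snr_db(bits):
    """Full-scale sine SNR of an ideal undithered quantizer."""
    return 6.02 * bits + 1.76


def expected_sinad_db(bits, signal_dbfs):
    """SINAD when the signal drops but the quantization floor stays put."""
    return ideal_snr_db(bits) + signal_dbfs


print(f"volume 1 -> {volume_to_db(1):.1f} dB")
print(f"expected 16-bit SINAD at -59.55 dBFS: "
      f"{expected_sinad_db(16, -59.55):.2f} dB")
```

The estimate lands within about half a dB of the measured 38.9 dB, which is consistent with the output still being quantized at 16 bits.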

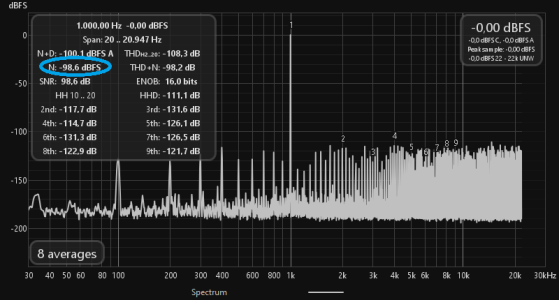

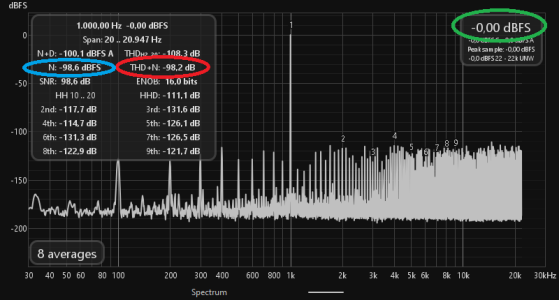

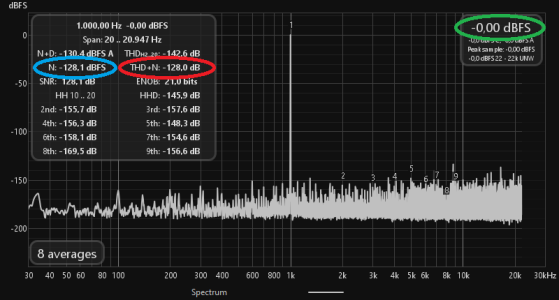

Now the same 44.1 kHz, 16-bit file, but this time with the "Fixed Resolution" setting enabled at 44.1 kHz and 24 bits.

Volume 100:

This leads to exactly the same measurement as before. The noise floor (blue) is still at the same level because I am using a 16-bit file which is now being converted to 24 bits, and the conversion does not reduce the noise already in the file. This is expected.

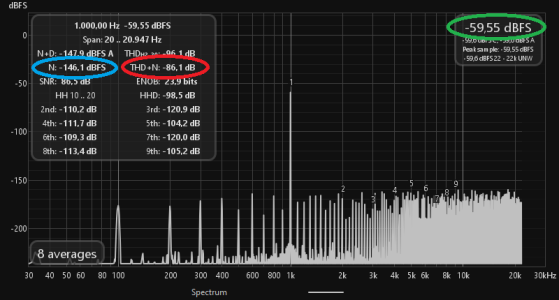

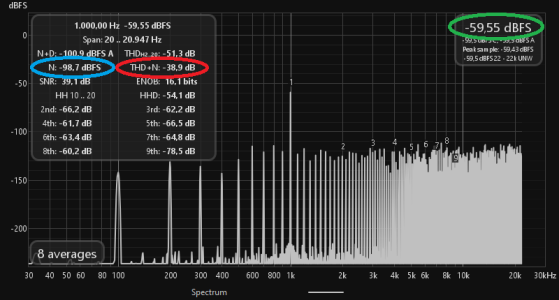

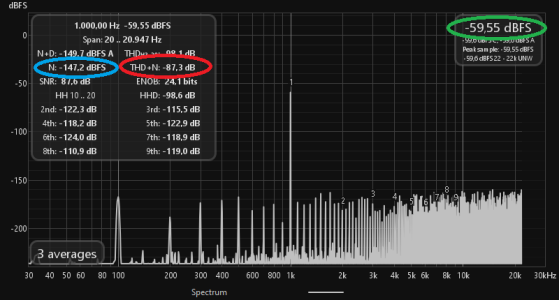

But now at a volume setting of 1, things change drastically:

We again have our signal at -59.55 dB. But watch the noise floor: it has moved down! In contrast to the 16-bit stream, the 24-bit stream still has room for the noise to decrease along with the volume. It does so until it hits the 24-bit floor, where it remains pegged. This is why the THD+N (in red) is now much better than before, though not as good as when the volume is maxed out.
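The same effect can be reproduced in a few lines. This is only a simulation sketch with plain rounding and no dither, assuming a 0.6 dB volume step; it does not model whatever the WiiM does internally.

```python
import math

FS, F0, N = 44100, 1000, 4410  # 100 ms of a 1 kHz tone at 44.1 kHz


def quantize(x, bits):
    """Round a value in [-1, 1] to a signed integer grid of the given width."""
    full_scale = 2 ** (bits - 1) - 1
    return round(x * full_scale) / full_scale


def snr_db(reference, actual):
    """Ratio of reference power to error power, in dB."""
    signal = sum(r * r for r in reference)
    noise = sum((a - r) ** 2 for r, a in zip(reference, actual))
    return 10 * math.log10(signal / noise)


gain = 10 ** (-59.4 / 20)  # volume 1 with the assumed 0.6 dB step
ideal = [math.sin(2 * math.pi * F0 * n / FS) for n in range(N)]
source16 = [quantize(x, 16) for x in ideal]   # the 16-bit file
reference = [gain * x for x in ideal]         # the ideal attenuated tone

out16 = [quantize(gain * s, 16) for s in source16]  # output stays at 16 bits
out24 = [quantize(gain * s, 24) for s in source16]  # output widened to 24 bits

snr16 = snr_db(reference, out16)
snr24 = snr_db(reference, out24)
print(f"16-bit output: {snr16:.1f} dB, 24-bit output: {snr24:.1f} dB")
```

The 24-bit output should come out tens of dB cleaner than the 16-bit one for the same file and the same volume setting, and it ends up limited by the 24-bit floor rather than by the noise of the original file.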

Now, just for completeness, let's play back a 24-bit file instead of a 16-bit file.

Volume 100:

Here, the noise floor is way below the 16-bit level.

At a volume of 1:

Now this looks very similar to the 16-bit file converted to 24 bits at a volume of 1, because that is the limit of a 24-bit stream at this signal level.

OK, so I think I have shown beyond reasonable doubt that the WiiM does not convert 16-bit input streams to 24 bits unless it is forced to do so by the "Fixed Resolution" setting.

Hence the suggestion in my previous post, aimed at anyone who connects the WiiM to a DAC with the "Fixed Volume Output" setting disabled and changes the volume in the WiiM.

One could argue that the WiiM team should update the behaviour so that the stream is always pushed to 24 bits whenever "Fixed Volume Output" is disabled and 24-bit support is enabled by the user in the Output Resolution setting. This would then be done without resampling as long as "Fixed Resolution" is disabled.
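To illustrate why the order matters for such a change, here is a small sketch comparing "pad to 24 bits, then attenuate" with "attenuate at 16 bits, then pad". The helper names are made up, and the gain again assumes a 0.6 dB step at volume 1.

```python
def attenuate(sample, gain):
    """Apply a linear gain to an integer sample and round back to an integer."""
    return round(sample * gain)


def pad_then_attenuate(sample16, gain):
    """Proposed order: widen to 24 bits first, then apply the volume."""
    return attenuate(sample16 << 8, gain)


def attenuate_then_pad(sample16, gain):
    """Order to avoid: the volume rounds at 16 bits, padding adds nothing back."""
    return attenuate(sample16, gain) << 8


gain = 10 ** (-59.4 / 20)
ramp = range(-32768, 32768, 16)  # a coarse sweep over the 16-bit range
levels_good = len({pad_then_attenuate(s, gain) for s in ramp})
levels_bad = len({attenuate_then_pad(s, gain) for s in ramp})
print(f"distinct output levels: pad first {levels_good}, "
      f"attenuate first {levels_bad}")
```

Padding first keeps every input level of the sweep distinguishable at the output, while attenuating first collapses them onto a few dozen values, which is the resolution loss seen in the 16-bit measurements.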

